Multispeaker/speaker adaptation same voice comparison

Nikolaos Ellinas, Myrsini Christidou, Alexandra Vioni, June Sig Sung, Aimilios Chalamandaris, Pirros Tsiakoulis and Paris Mastorocostas

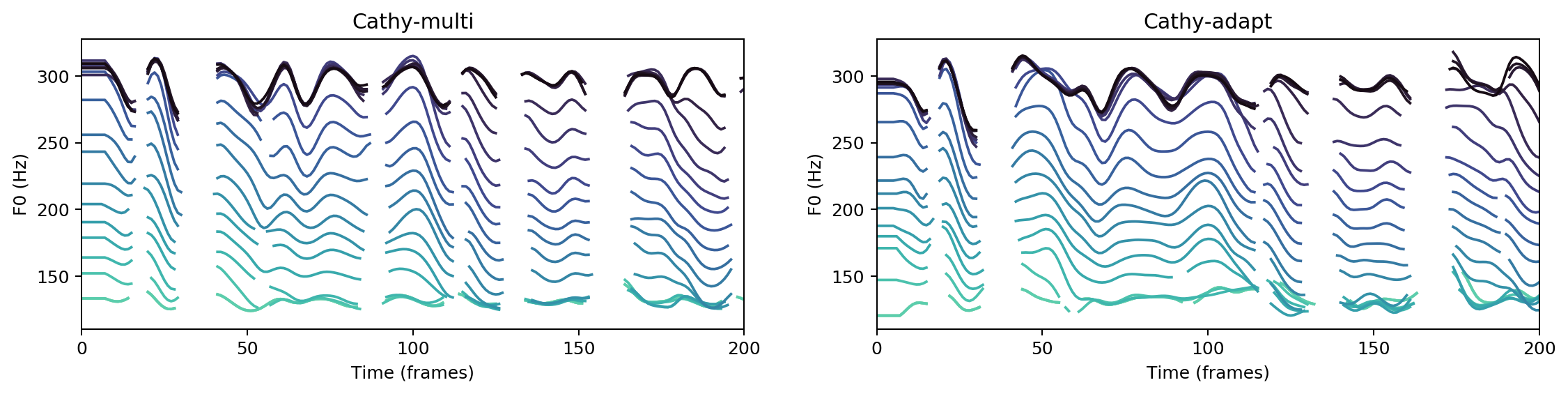

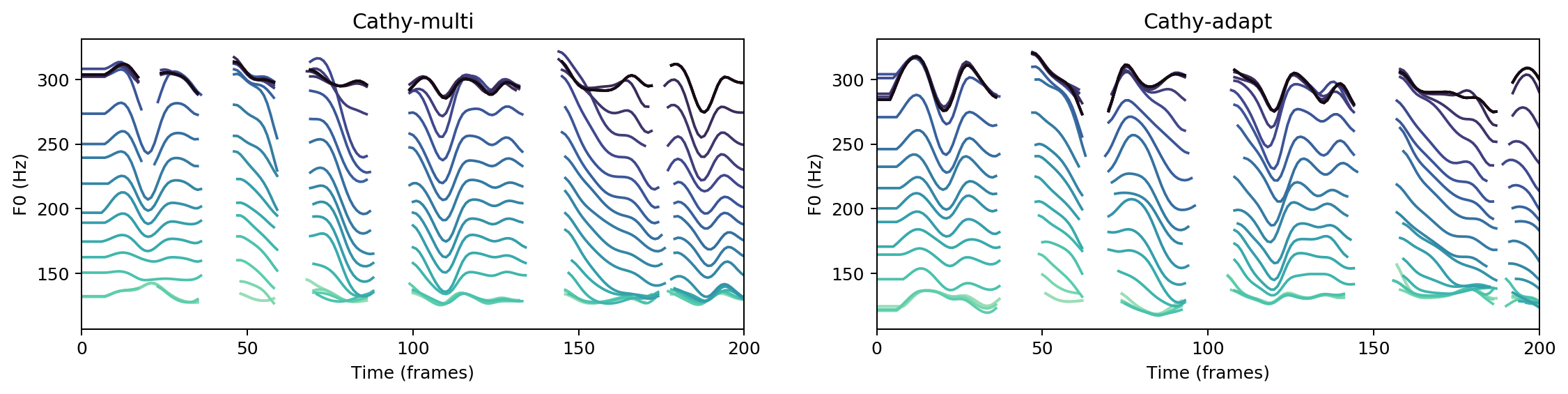

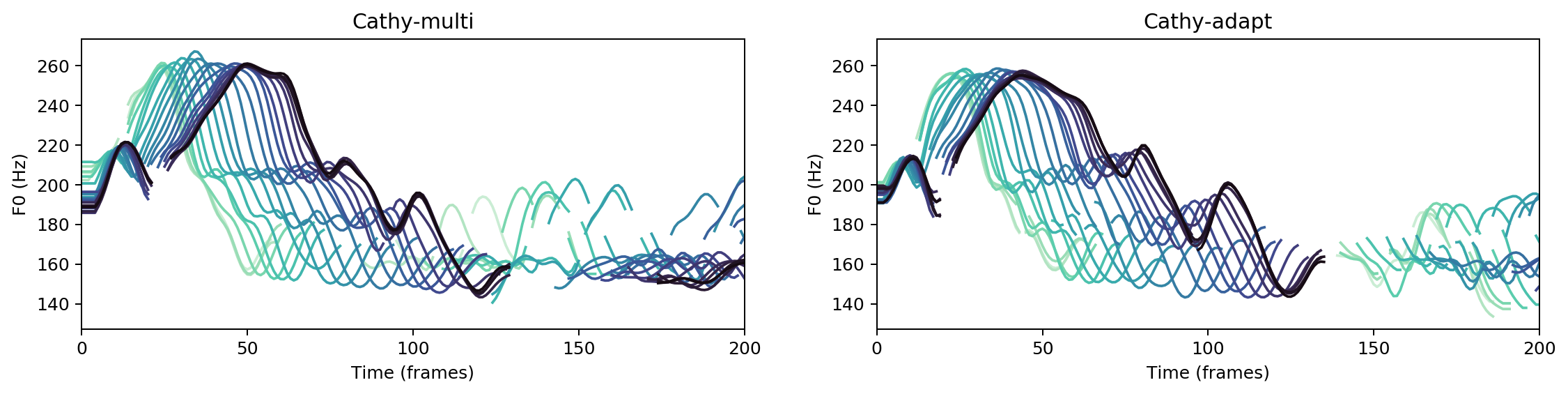

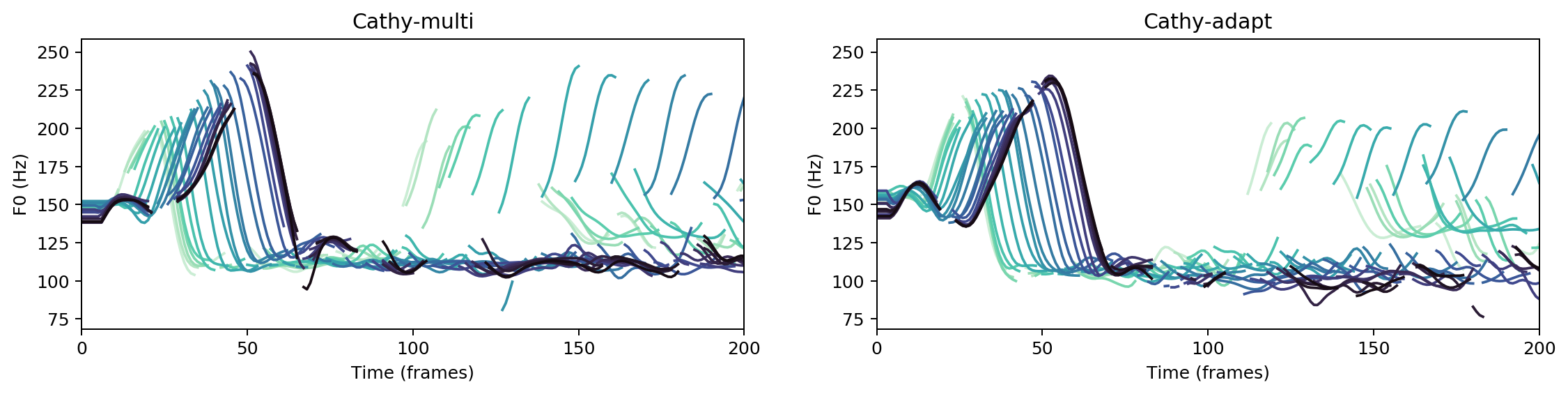

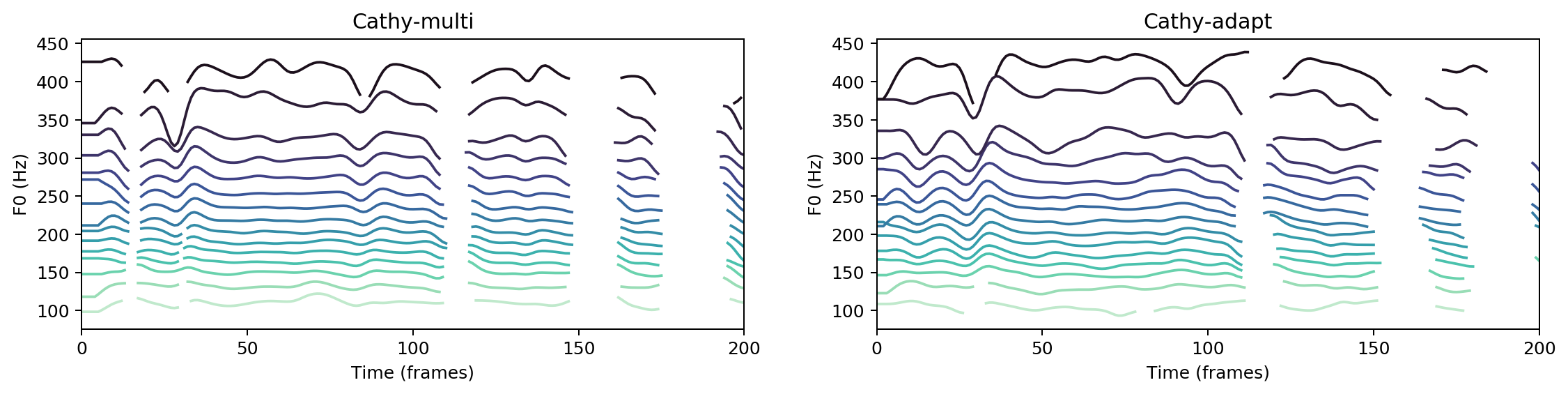

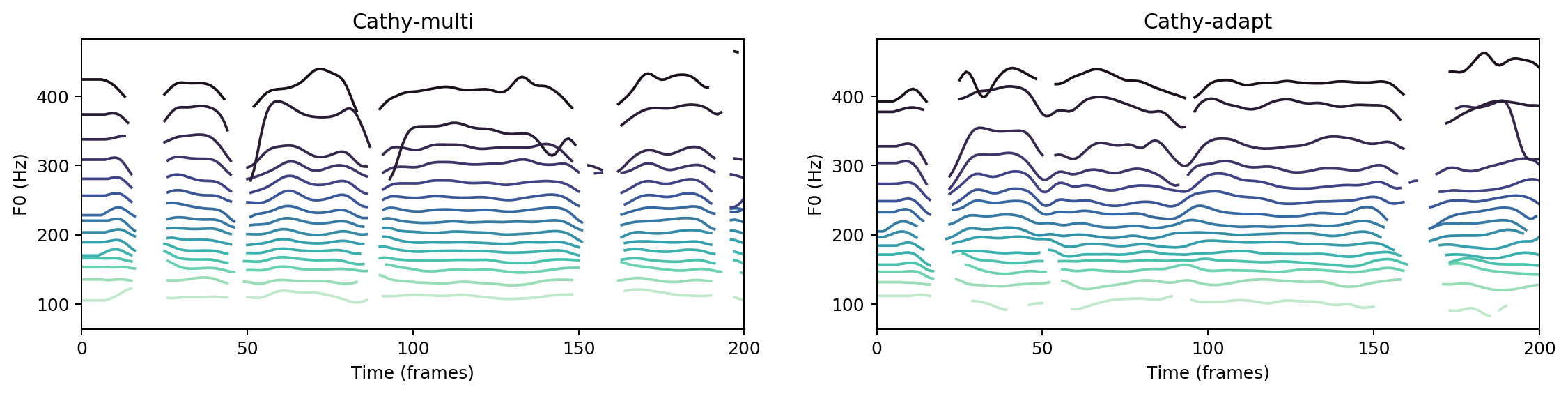

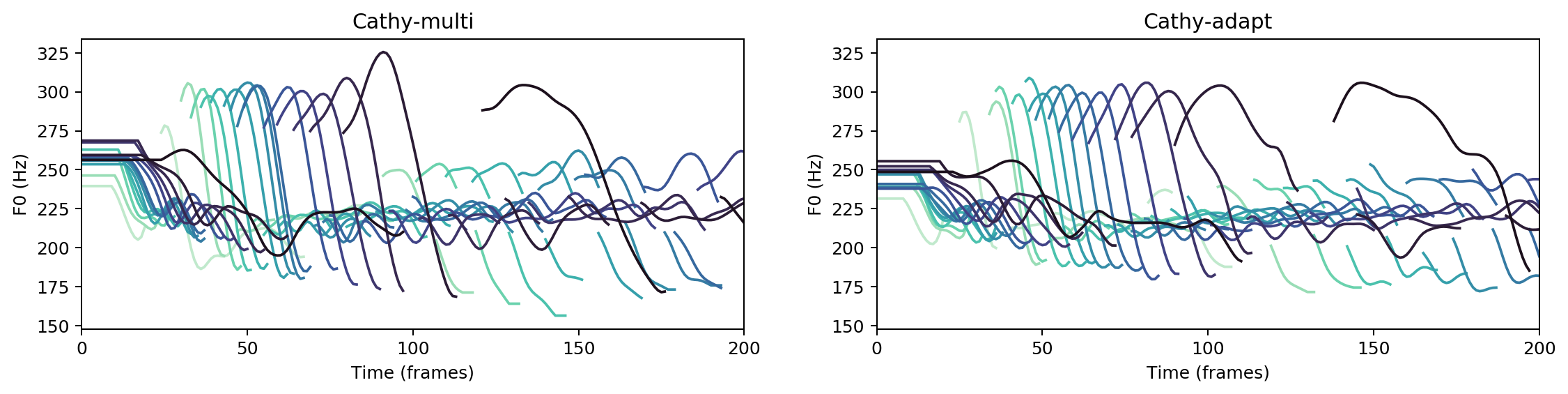

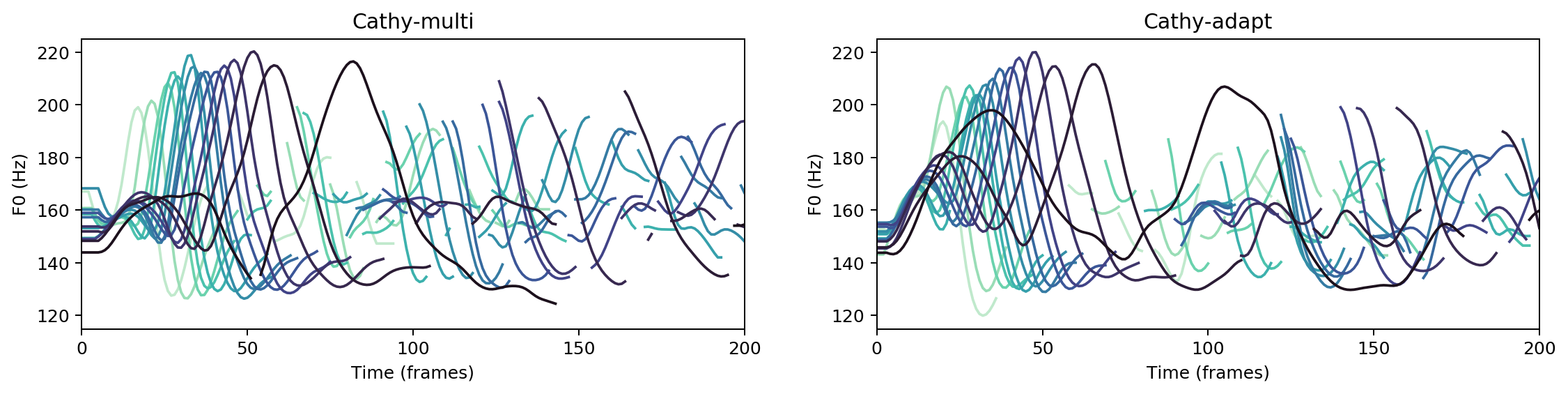

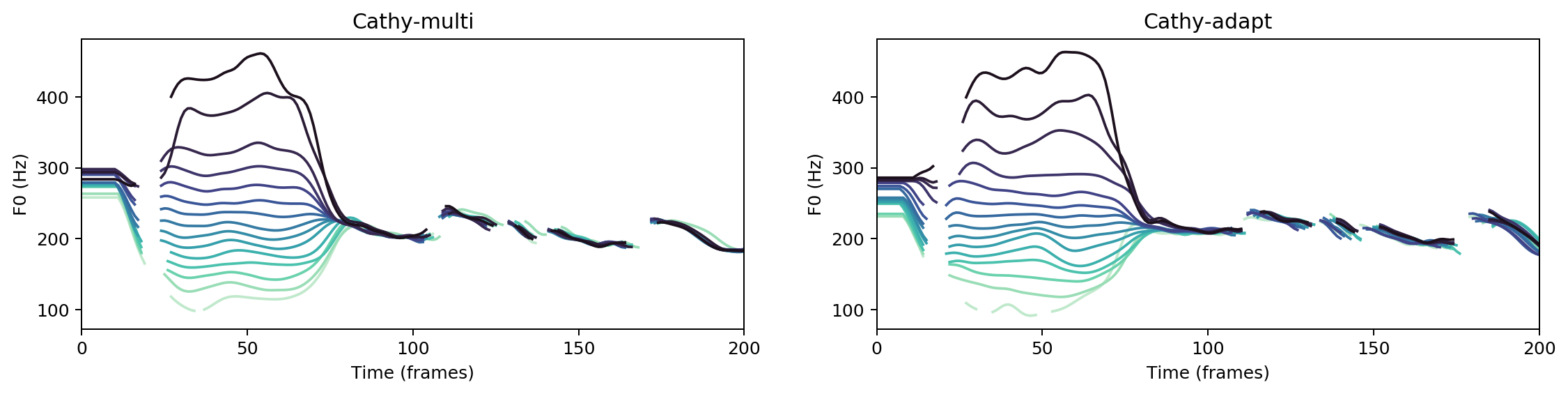

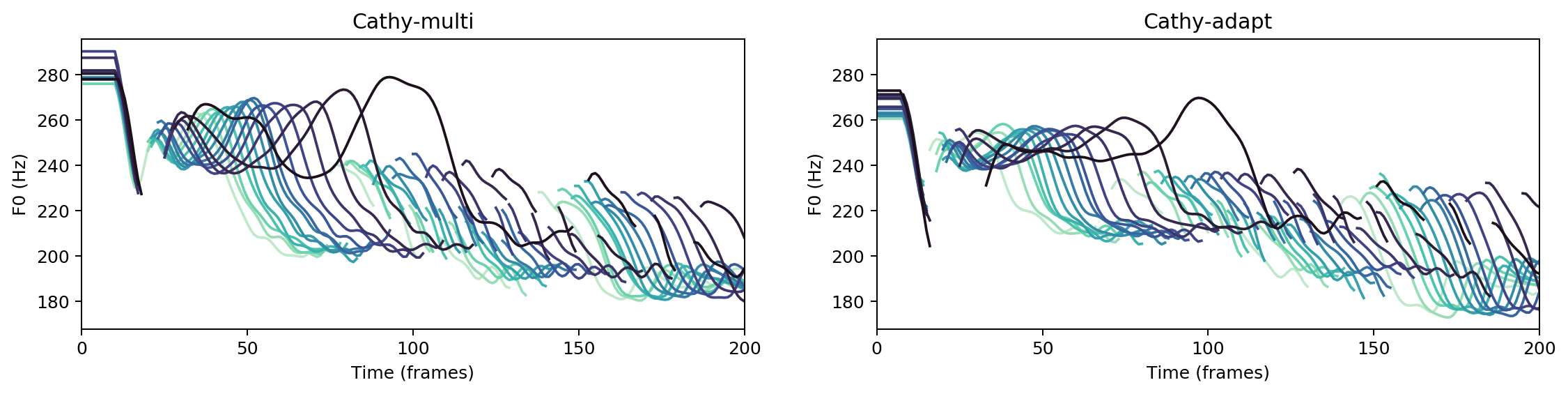

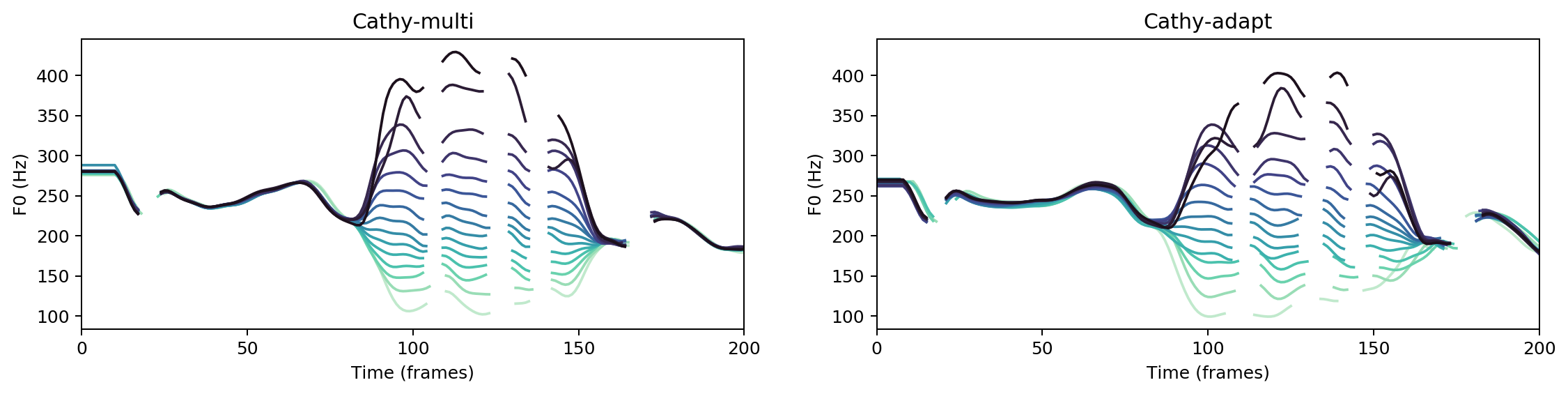

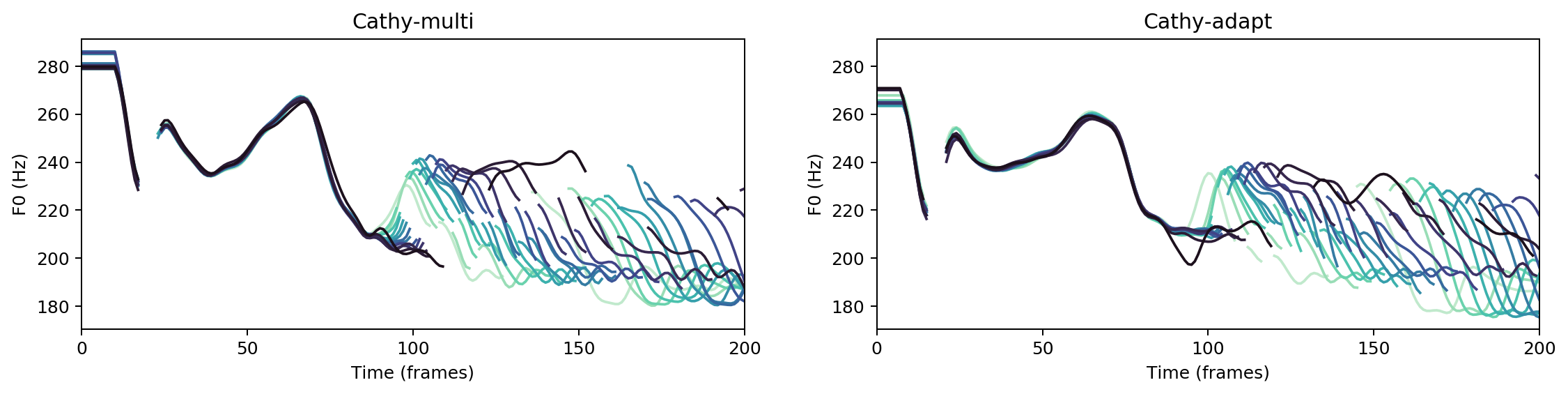

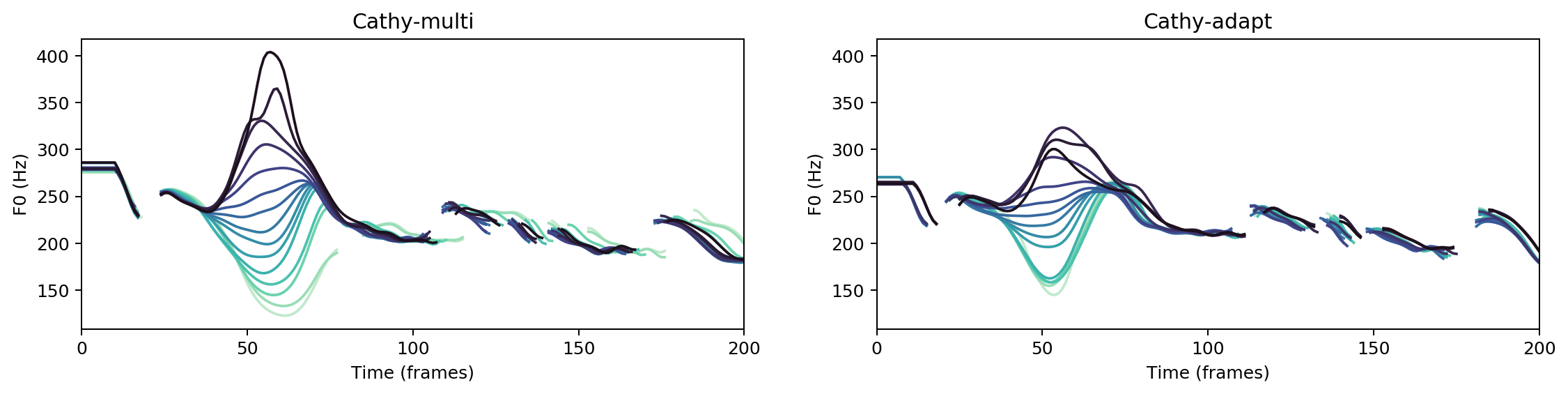

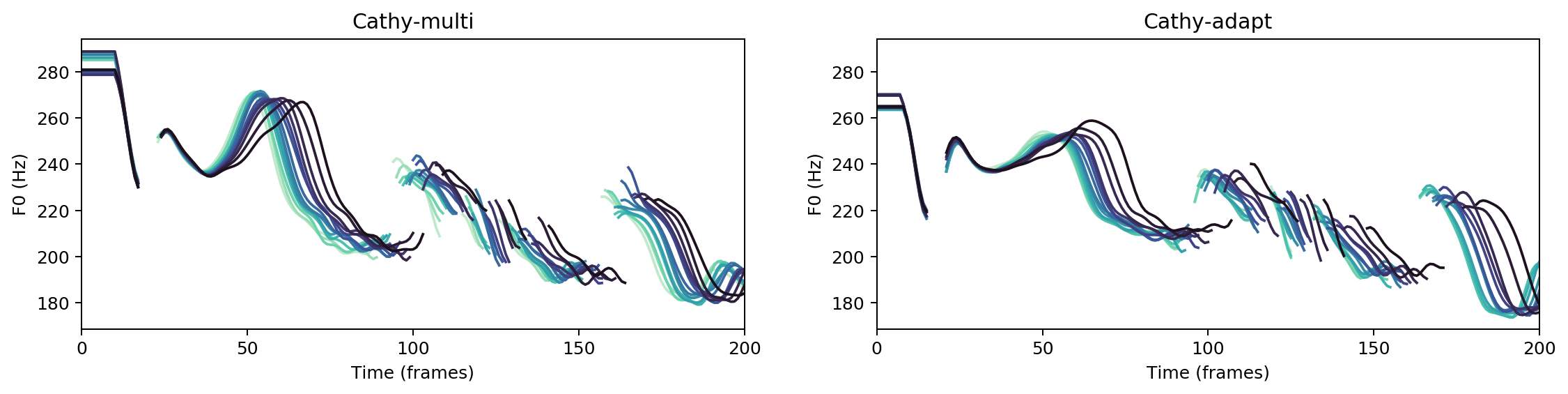

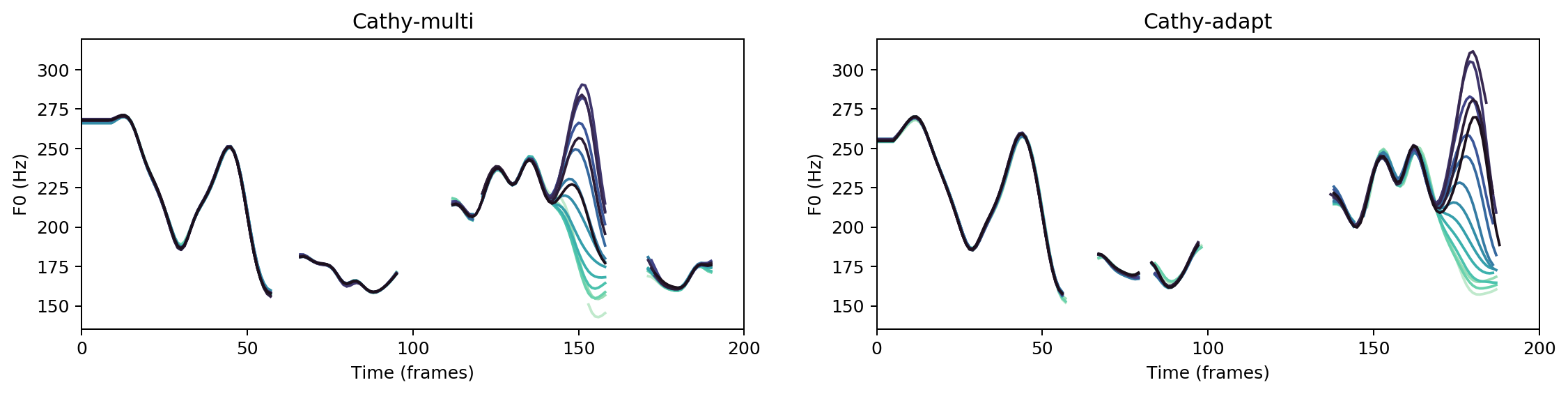

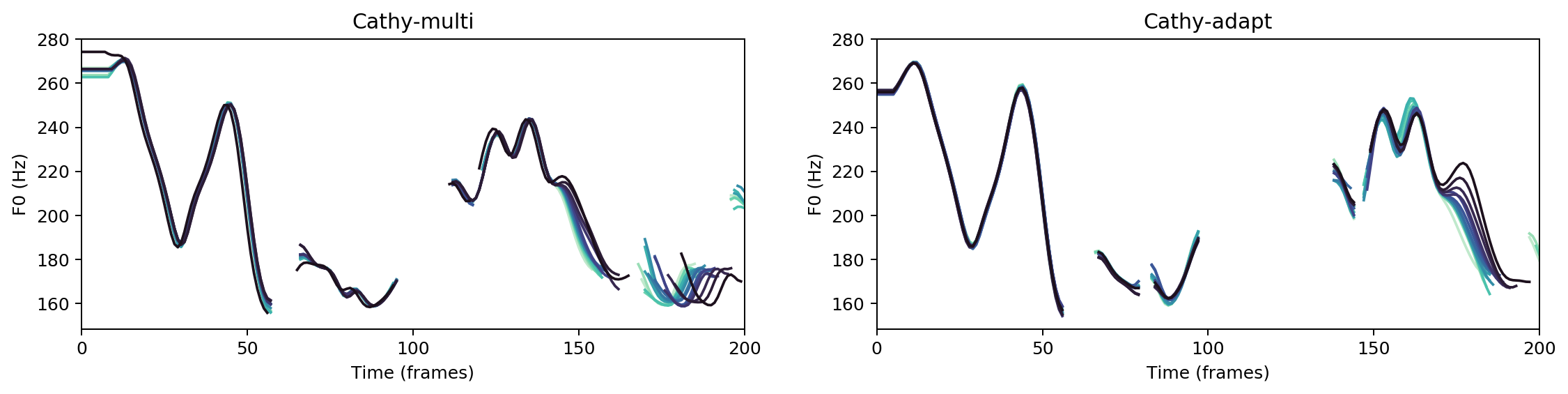

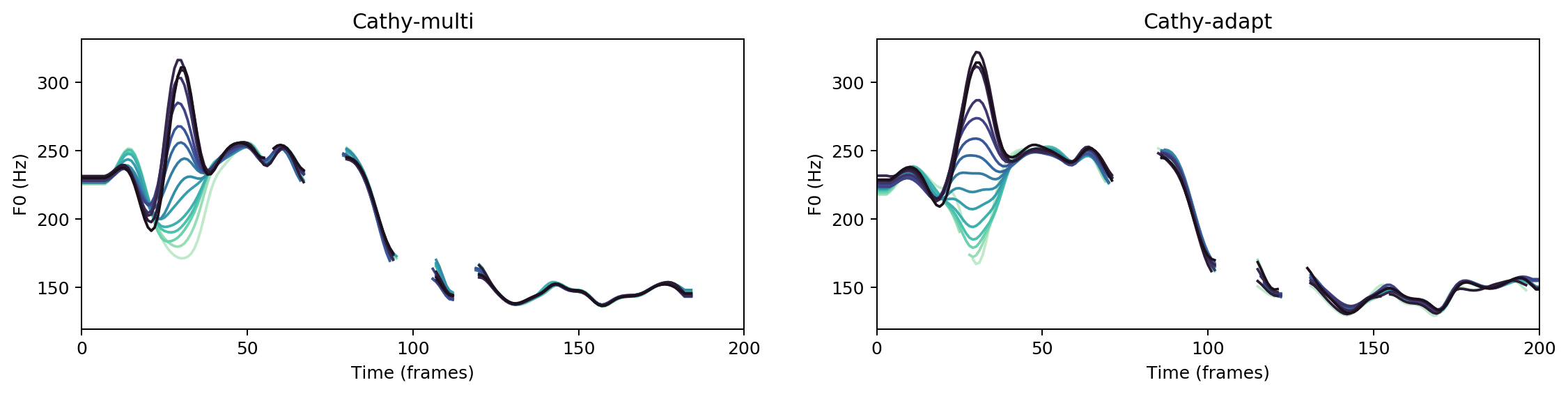

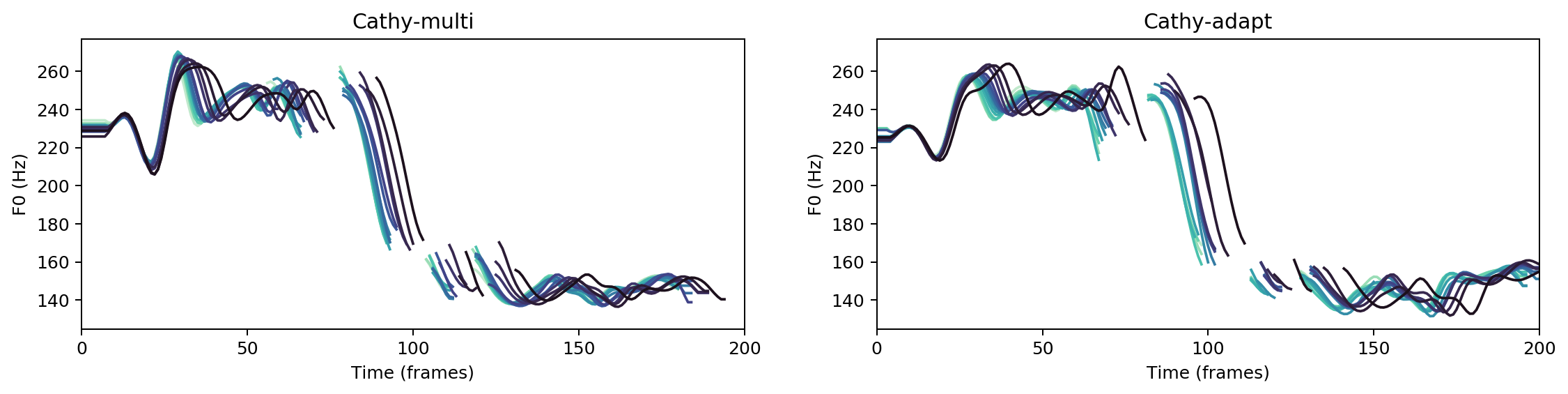

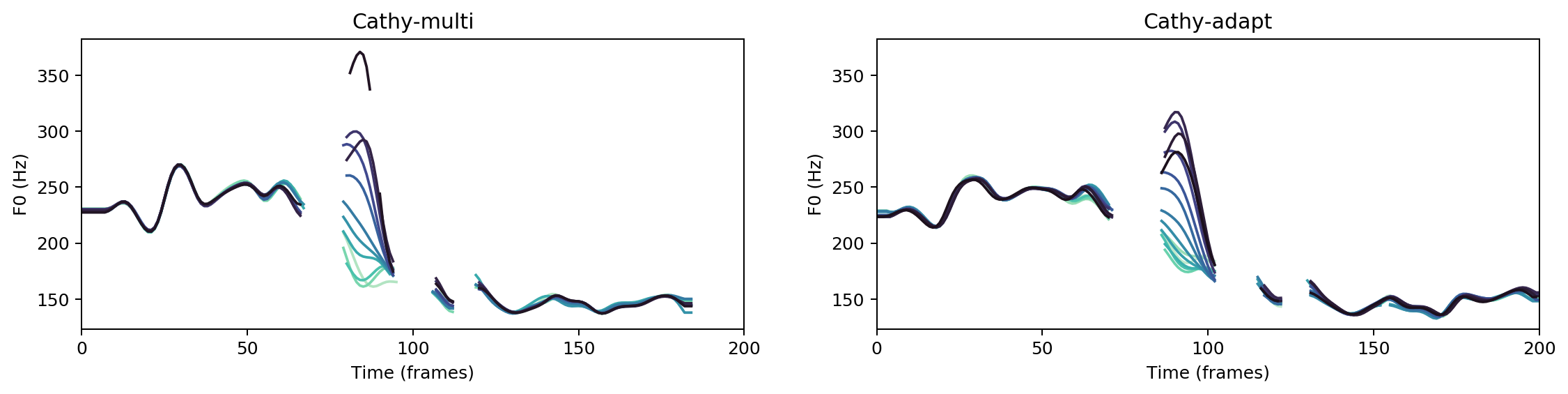

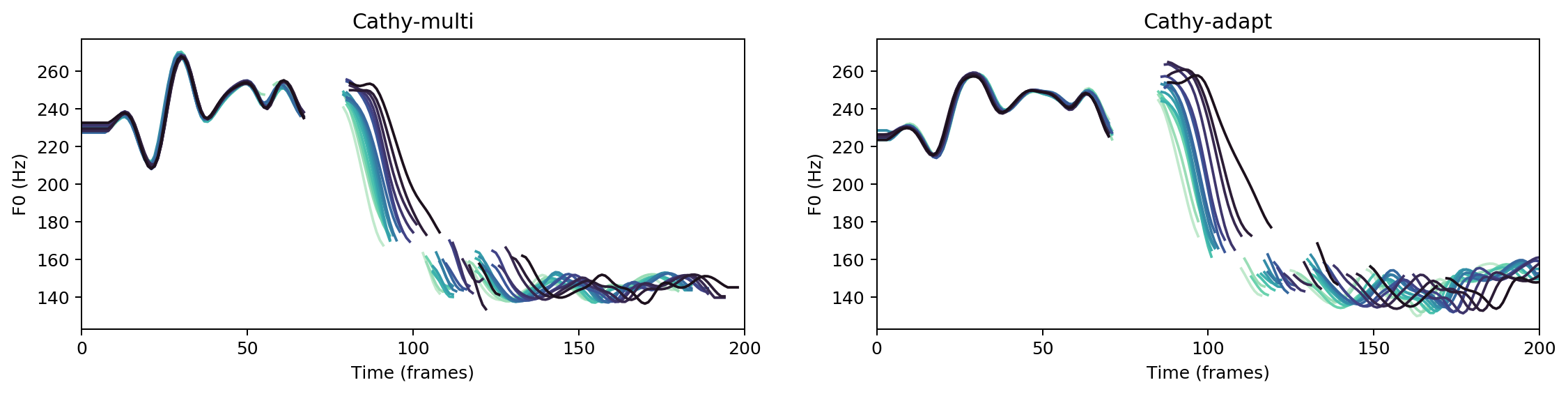

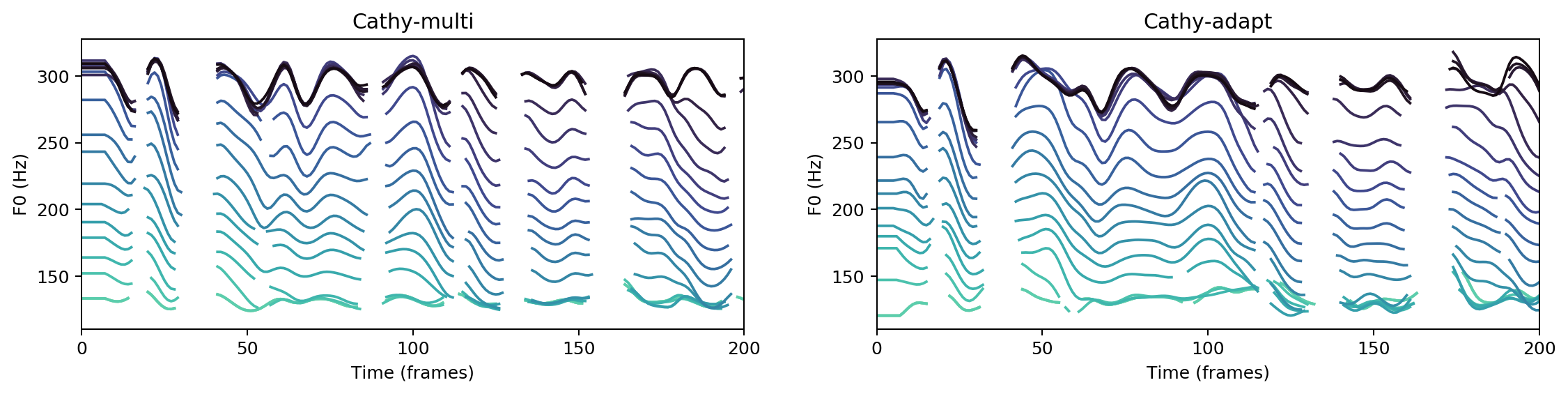

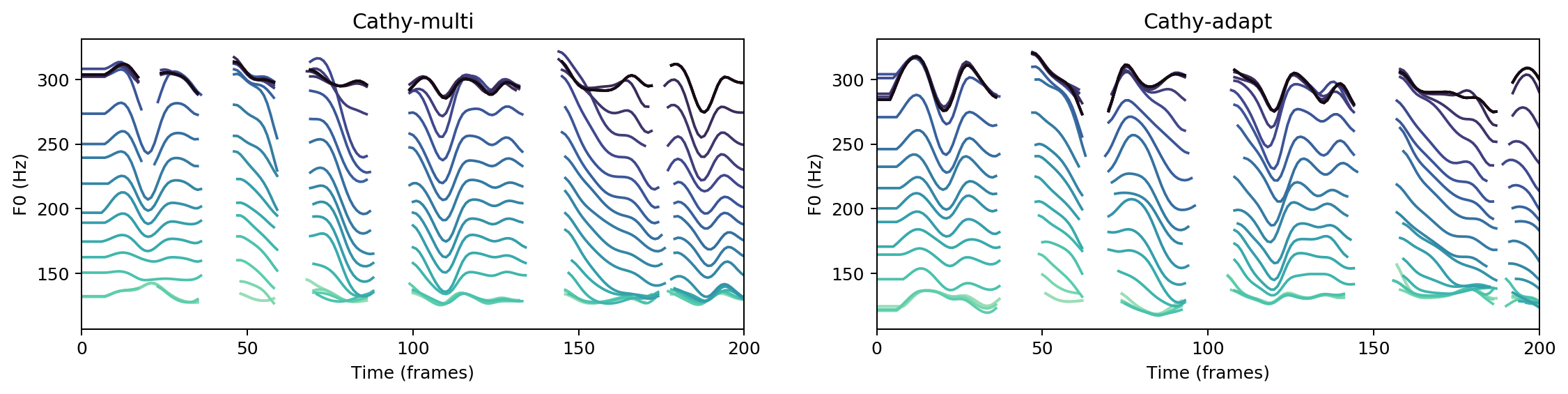

Abstract: This paper presents a method, based on prosodic clustering, for phoneme-level control of F0 and duration in a text-to-speech system. An autoregressive attention-based model is used, incorporating a prosody encoder module that is fed discrete prosodic labels. Phoneme-level F0 and duration features are extracted from the speech data and discretized using unsupervised clustering to produce a sequence of prosodic labels. The range and coverage of prosodic control are shown to improve with augmentation, F0 normalization, balanced clustering for duration, and speaker-independent clustering in a multispeaker setup. The final model enables fine-grained phoneme-level prosody control for all speakers in the training set while maintaining speaker identity. A prior prosody encoder is also trained, which learns the style of each speaker and enables speech synthesis without requiring reference audio. The model is also fine-tuned to unseen speakers with limited amounts of data and is shown to retain its prosody control capabilities, verifying that the speaker-independent prosodic clustering is effective. Experimental results verify that the model maintains high output speech quality and that the proposed method allows efficient prosody control within each speaker's range, despite the variability that a multispeaker setting introduces.
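The discretization step described in the abstract can be illustrated with a minimal sketch. The abstract does not name the clustering algorithm, the normalization formula, or the balancing scheme, so everything here is an assumption made for illustration: per-speaker z-scoring stands in for F0 normalization, a plain 1-D k-means stands in for the unsupervised clustering, and equal-frequency binning stands in for balanced duration clustering. All function names and the toy data are hypothetical.

```python
import statistics
from collections import defaultdict


def normalize_f0_per_speaker(f0_values, speakers):
    """Z-score each phoneme-level F0 value with its own speaker's mean and std.

    Assumption: this is one plausible form of F0 normalization that lets
    speakers with different pitch ranges share a single set of clusters.
    """
    by_speaker = defaultdict(list)
    for f0, spk in zip(f0_values, speakers):
        by_speaker[spk].append(f0)
    stats = {
        spk: (statistics.mean(vals), statistics.pstdev(vals) or 1.0)
        for spk, vals in by_speaker.items()
    }
    return [(f0 - stats[spk][0]) / stats[spk][1]
            for f0, spk in zip(f0_values, speakers)]


def kmeans_1d(values, k, iters=50):
    """Plain 1-D k-means (assumed clustering method); returns sorted centroids."""
    ordered = sorted(values)
    # Deterministic initialisation at evenly spaced quantiles.
    centroids = [ordered[int((i + 0.5) * len(ordered) / k)] for i in range(k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            buckets[nearest].append(v)
        updated = [statistics.mean(b) if b else centroids[j]
                   for j, b in enumerate(buckets)]
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids)


def assign_labels(values, centroids):
    """Map each value to the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda j: abs(v - centroids[j]))
            for v in values]


def balanced_bin_edges(values, k):
    """Equal-frequency cut points, so each bin holds about the same count."""
    ordered = sorted(values)
    return [ordered[(i * len(ordered)) // k] for i in range(1, k)]


def bin_labels(values, edges):
    """Label each value by the number of cut points it reaches or exceeds."""
    return [sum(v >= e for e in edges) for v in values]


# Toy phoneme-level features for two hypothetical speakers.
f0_hz = [110.0, 120.0, 130.0, 210.0, 220.0, 230.0]
speaker_ids = ["a", "a", "a", "b", "b", "b"]
durations_s = [0.03, 0.04, 0.05, 0.06, 0.08, 0.20]

f0_norm = normalize_f0_per_speaker(f0_hz, speaker_ids)
f0_labels = assign_labels(f0_norm, kmeans_1d(f0_norm, k=3))
duration_labels = bin_labels(durations_s, balanced_bin_edges(durations_s, k=3))

print(f0_labels)
print(duration_labels)
```

In this toy setup, normalizing before clustering means the low, middle, and high F0 labels refer to positions within each speaker's own range rather than to absolute pitch. Equal-frequency binning keeps a single long outlier duration from occupying a cluster of its own, which is one way to read the motivation for balanced clustering.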